The project I worked on during my time at Insight Data Science in New York City is called InspectCare: Using Online Activity to Identify Illegal Childcare Operations. I completed it over three weeks as a consulting project for a city agency.

Many illegal childcare centers operate in New York City, and when something goes wrong, these centers can make big headlines and attract a lot of attention. Who wouldn’t be outraged at kids dying in poorly run childcare centers? Often the parents don’t even know these centers are illegal; they operate so openly that they give the impression of being legitimate. Naturally, people expect the city government to either enforce regulations or close these places down.

To that end, the city does what it can to monitor for illegal activity, and it investigates further whenever it finds something suspicious. This includes monitoring the internet, which is no small task! It takes a lot of time and effort for workers to keep tabs on the many websites out there.

Scraping Craigslist

A particularly difficult website to monitor is Craigslist. The posts are often poorly formatted, and there’s a high volume of low-quality content. There are roughly 2,000 posts a week, and after about a week they expire and are removed from the site. For my project, I wanted to see what could be done to improve activity monitoring there.

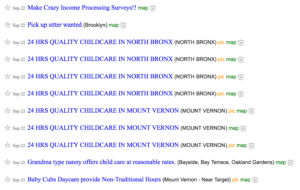

There is a childcare subforum, but it still has a few problems. For one, there are a lot of duplicate posts. For another, the posts fall into a few distinct subcategories that aren’t labeled.

As you can see in the screenshot above, there are posts for childcare centers, nannies and babysitters, as well as various other things like tutors, transportation, and classic spam. Sorting posts into these groups is a natural place to apply a classification algorithm.

To start, we scrape the data from Craigslist using the Scrapy Python package. The main information comes from the body of each post. It’s only possible to collect about one week of posts at a time this way, since older posts expire.
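The write-up doesn’t show the scraping code, so the following is only a minimal sketch of the “take the text from the body of the post” step, using the standard library’s `html.parser` instead of Scrapy so it runs on its own. It assumes the post body lives in an element with `id="postingbody"`; that id and the names `PostingBodyParser` and `extract_post_body` are assumptions for illustration, not taken from the project.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class PostingBodyParser(HTMLParser):
    """Collect the text inside the element whose id matches target_id.

    The default id "postingbody" is an assumption about Craigslist's markup.
    """

    def __init__(self, target_id="postingbody"):
        super().__init__()
        self.target_id = target_id
        self.depth = 0      # > 0 while we are inside the target element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
        elif dict(attrs).get("id") == self.target_id:
            self.depth = 1

    def handle_startendtag(self, tag, attrs):
        # Self-closing tags like <br/> open and close at once: no depth change.
        pass

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.chunks.append(data)


def extract_post_body(html):
    """Return the post body as a single whitespace-normalized string."""
    parser = PostingBodyParser()
    parser.feed(html)
    parser.close()
    # Join text nodes with spaces so <br>-separated lines don't run together.
    return " ".join(" ".join(parser.chunks).split())
```

Inside an actual Scrapy spider callback, the same step is usually a CSS selector such as `response.css("#postingbody ::text").getall()`, again assuming that element id.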

Classifying Posts

The data from Craigslist isn’t labeled with the three categories I needed: Childcare Center, Babysitter/Nannies, and Other. I couldn’t find an unsupervised machine learning approach that would reliably separate these categories, so I had to do the dirty work of labeling many of the posts (619) myself in order to establish some ground truth data.

Once the corpus of Craigslist posts is collected and a portion is labeled, the text is turned into numerical features using some NLP (natural language processing) techniques. First, a tf-idf (term frequency-inverse document frequency) vectorizer is applied to the whole corpus. It collects every term and weights it by how often it appears in a post, while terms that appear in many posts across the corpus, and therefore say little about any single post, have their weights reduced. Stopwords such as “the” and “and” are removed entirely. In this way the text features are generated automatically rather than chosen by hand.
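To make the weighting concrete, here is a small from-scratch sketch of tf-idf in plain Python. The project most likely used a library vectorizer; this version only illustrates the idea. The smoothed IDF formula, ln((1 + n) / (1 + df)) + 1 followed by L2 normalization, mirrors scikit-learn’s `TfidfVectorizer` defaults, and the tiny `STOPWORDS` set is a hypothetical placeholder for a real stopword list.

```python
import math
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list; a real project would use a fuller one.
STOPWORDS = {"a", "an", "and", "the", "for", "in", "of", "to", "our", "is", "we"}


def tokenize(text):
    """Lowercase, split on non-alphanumeric characters, and drop stopwords."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]


def fit_idf(corpus):
    """Smoothed inverse document frequency for every term in the corpus.

    Terms that appear in many documents get weights close to 1; rare terms get more.
    """
    n = len(corpus)
    df = Counter()
    for doc in corpus:
        df.update(set(tokenize(doc)))   # count each term once per document
    return {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}


def tfidf_vector(text, idf):
    """Sparse tf-idf vector: raw term counts times IDF, then L2-normalized.

    Terms not seen when fitting the IDF are ignored.
    """
    counts = Counter(t for t in tokenize(text) if t in idf)
    weights = {t: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {t: w / norm for t, w in weights.items()} if norm else {}


corpus = [
    "Licensed daycare center in Brooklyn",
    "Experienced nanny for infants",
    "Daycare openings for toddlers",
]
idf = fit_idf(corpus)
vec = tfidf_vector("daycare daycare nanny", idf)
```

Here “daycare” appears in two of the three posts, so its IDF is lower than that of “nanny”, which appears in only one; “for” never gets a weight because it is a stopword.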

The features are then fed into a multinomial logistic regression model. This model works well on wide, sparse datasets with many features, which are common in NLP problems.
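The post doesn’t say which implementation was used, so the following is a from-scratch sketch of multinomial (softmax) logistic regression trained by full-batch gradient descent, working directly on sparse feature dictionaries like tf-idf output. The function names, learning rate, epoch count, and L2 penalty are illustrative assumptions, not the project’s settings.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def class_scores(W, b, x, classes):
    """Linear score per class for a sparse feature dict x."""
    return [b[c] + sum(W[c].get(f, 0.0) * v for f, v in x.items()) for c in classes]


def train_softmax(X, y, classes, lr=0.5, epochs=200, l2=1e-3):
    """Fit multinomial logistic regression with cross-entropy loss.

    X is a list of sparse feature dicts (e.g. tf-idf weights); y holds class labels.
    """
    W = {c: {} for c in classes}    # one sparse weight vector per class
    b = {c: 0.0 for c in classes}
    n = len(X)
    for _ in range(epochs):
        grad_W = {c: {} for c in classes}
        grad_b = {c: 0.0 for c in classes}
        for x, label in zip(X, y):
            probs = softmax(class_scores(W, b, x, classes))
            for c, p in zip(classes, probs):
                err = p - (1.0 if c == label else 0.0)  # gradient of loss w.r.t. score
                grad_b[c] += err
                for f, v in x.items():
                    grad_W[c][f] = grad_W[c].get(f, 0.0) + err * v
        for c in classes:
            b[c] -= lr * grad_b[c] / n
            for f in set(W[c]) | set(grad_W[c]):
                w = W[c].get(f, 0.0)
                W[c][f] = w - lr * (grad_W[c].get(f, 0.0) / n + l2 * w)
    return W, b


def predict(W, b, x, classes):
    """Return the class with the highest score."""
    scores = class_scores(W, b, x, classes)
    return classes[max(range(len(classes)), key=scores.__getitem__)]


classes = ["Childcare Center", "Babysitter/Nannies", "Other"]
X = [{"daycare": 1.0, "licensed": 0.5}, {"center": 1.0, "daycare": 0.5},
     {"nanny": 1.0}, {"babysitter": 1.0, "nanny": 0.5},
     {"tutor": 1.0}, {"math": 1.0, "tutor": 0.5}]
y = ["Childcare Center", "Childcare Center", "Babysitter/Nannies",
     "Babysitter/Nannies", "Other", "Other"]
W, b = train_softmax(X, y, classes)
```

Because each post only touches the features it actually contains, the cost per example scales with the number of nonzero terms rather than the full vocabulary, which is part of why this model copes well with wide text data.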

Matching the Database

The list of scraped Craigslist posts has now been narrowed down to childcare center posts with duplicates removed, about 20% of the original volume; that alone makes the feed much easier to read. The next step is to see if any of these posts can be matched against the databases provided by the city, which contain records on licensed operations and leads on suspected illegal operations.
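The write-up doesn’t describe how duplicates were detected, so this is only one simple possibility, sketched under that assumption: keep posts the classifier labeled as childcare centers, then drop any whose body is identical to an earlier one after lowercasing and stripping punctuation. The helper names are hypothetical. This catches verbatim reposts but not lightly edited ones, which would need a near-duplicate technique such as shingling.

```python
import hashlib
import re


def fingerprint(text):
    """Hash of the post body after lowercasing and removing punctuation/extra spaces."""
    normalized = " ".join(re.findall(r"[a-z0-9]+", text.lower()))
    return hashlib.sha1(normalized.encode("utf-8")).hexdigest()


def childcare_center_posts(posts, predicted_labels, keep_label="Childcare Center"):
    """Keep posts with the given predicted label, dropping repeated bodies.

    The first occurrence of each fingerprint wins, so input order is preserved.
    """
    seen = set()
    kept = []
    for post, label in zip(posts, predicted_labels):
        if label != keep_label:
            continue
        key = fingerprint(post)
        if key in seen:
            continue
        seen.add(key)
        kept.append(post)
    return kept


posts = ["Licensed daycare, ages 2-5!", "licensed  DAYCARE ages 2-5",
         "Nanny available weekends", "Home daycare in Queens"]
labels = ["Childcare Center", "Childcare Center", "Babysitter/Nannies", "Childcare Center"]
filtered = childcare_center_posts(posts, labels)
```

The second post differs only in case and punctuation, so it is treated as a repost of the first, and the nanny post is dropped by the label filter.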

The search is done over names and addresses, which can appear differently in the database than in the Craigslist post. For example, many organizations have an Inc. or LLC in the database but don’t include it in their postings, and addresses vary between forms such as Ave and Avenue. These differences make exact matching difficult and call for a fuzzy full-text search, which can be done in PostgreSQL. So far, matches have been made for about 10% of the posts. Further improvements are in the works as more is learned about the data and as more Craigslist data is scraped.
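The matching itself runs in PostgreSQL, where one common option is the `pg_trgm` extension’s trigram `similarity()` function. As a database-free illustration of the same idea, the sketch below swaps in Python’s `difflib.SequenceMatcher`: it first normalizes away the differences mentioned above (Inc./LLC suffixes, Ave vs. Avenue) and then picks the most similar record. The normalization tables, the 0.85 threshold, and the function names are hypothetical choices for illustration.

```python
import re
from difflib import SequenceMatcher

# Hypothetical normalization tables; extend them as new variants turn up.
# Note that a naive "st" -> "street" rule would mangle names like "St. Marks".
ENTITY_SUFFIXES = {"inc", "llc", "corp", "ltd"}
ADDRESS_ABBREVIATIONS = {"ave": "avenue", "st": "street", "blvd": "boulevard", "rd": "road"}


def normalize(text):
    """Lowercase, drop punctuation and entity suffixes, expand address abbreviations."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    tokens = [ADDRESS_ABBREVIATIONS.get(t, t) for t in tokens if t not in ENTITY_SUFFIXES]
    return " ".join(tokens)


def best_match(query, records, threshold=0.85):
    """Return (record, score) for the most similar record, or None if below threshold."""
    q = normalize(query)
    scored = [(r, SequenceMatcher(None, q, normalize(r)).ratio()) for r in records]
    if not scored:
        return None
    record, score = max(scored, key=lambda pair: pair[1])
    return (record, score) if score >= threshold else None


records = ["Little Stars Daycare Inc., 123 Atlantic Avenue",
           "Bright Beginnings LLC, 45 Main Street"]
match = best_match("Little Stars Daycare - 123 Atlantic Ave", records)
```

After normalization, the post and the first database record become the same string, so the match scores 1.0; a raw string comparison would have been thrown off by the “Inc.” and the abbreviation.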